The Criminal, the Extortionist and the Dirty Cow

24 Oct 2016

With DevOps and Cloud computing practices becoming more and more commonplace in the IT industry, companies are starting to benefit from managing bigger infrastructure footprints and releasing software faster. IT organisations have to ensure their infrastructure and applications are secured and impenetrable from internal or external cyber-attack. How do you stay up to date with the latest practices to be more efficient in your work without compromising on security by taking short cuts? This two-part blog outlines what the biggest threats are and how DevOps practices can complement the security position of your organisation.

As I write this blog, the top three stories in the technology section of the BBC News website are about DDoS, a zero-day bug in Linux and ransomware attacks. On Friday 21st Oct, Dyn’s servers were targeted in an attack in which huge amounts of data were directed at them, making their services inoperable. Dyn acts as a directory service for huge numbers of firms; it’s like a giant address book for the internet. As a result, customers could no longer access sites like Twitter, Spotify, Reddit, SoundCloud and PayPal. In another article, we are told about the “Dirty Cow” bug, which has been present in many versions of Linux for almost a decade. The final story explains how ransomware is the principal weapon in attackers’ armoury. Ransomware is software that first takes over your network, then encrypts all your files before demanding a ransom - usually in Bitcoin - to unlock them.

Cyber criminals are a very real threat and some attacks have had catastrophic consequences for companies in the past. There is a lot of money to be made from cybercrime. Ransomware payments reportedly spiked to around $200M in the first quarter of 2016, compared to around $25M in the whole of 2015. Accessing customer data is no longer just about banking details; criminals are also weaponising customer data for extortion purposes. Highly proficient technical mercenaries are selling themselves as Criminal-as-a-Service to the highest bidder to help implement and execute cyber-attacks. These attacks are no longer targeted only at large companies. Smaller companies are also at risk and hold data that is just as valuable for extortion or ransom.

Gartner predicts that by 2020 a third of successful attacks experienced by enterprises will be on their shadow IT resources. Shadow IT are usually the innovators, on the bleeding edge of IT, implementing DevOps practices. How, as an industry, can we encourage the innovation but also enforce standards and stop the InfoSec employee from always saying no?

The scary truth is that the majority of attacks exploit pre-existing, known vulnerabilities, whether that’s an unpatched package on a server or a flaw in an application. Take TalkTalk, for example, a rather high-profile attack that compromised more than 150,000 customers’ details. That was a SQL injection attack exploiting an application vulnerability that had been known about for 3 years. The Information Commissioner’s Office (ICO) is starting to assert its authority and handed out a record £400,000 fine earlier this year for that breach.

The cyber incidents that the ICO dealt with last year can be grouped into the following categories:

- Cyber security misconfigurations - unauthorised access of personal data due to inadequate security settings

- Exfiltration - the unauthorised transfer of data to another location, controlled by the hacker

- Phishing - tricking people into revealing valuable personal details

- Distributed Denial of Service (DDoS) - DDoS attacks are a method of stopping a website or service from running and involve overloading the site so that the host cannot handle the volume of traffic, often used for extortion or as a distraction for another attack

- Cryptographic flaws - failing to use HTTPS secure encryption on websites which involve the collection and transfer of personal data

In 2015, Verizon surveyed 10,000 companies and found that 1 in 5 of them were non-compliant with industry regulation. Of those 2,000 breached companies:

- 45% were breached due to patch management and development security

- 72% were breached due to gaps in log and monitoring management

- 73% were breached due to holes in their firewall configurations

There are many reasons why it’s hard to stay compliant. Each industry has its own regulatory guidelines and some regulations span many industries. Regulations that come to mind include Dodd-Frank, the Payment Card Industry Data Security Standard (PCI DSS), Sarbanes-Oxley (SOX) and the Health Insurance Portability and Accountability Act (HIPAA), to name a few.

The regulations being enforced change all the time. Companies can struggle to stay on top of the changes, especially with new technologies emerging just as quickly. Take Docker, for instance: Docker had its first release 3 years ago and I am now seeing more and more companies embrace production Docker workloads. Adopters of this technology have had to find ways to patch and evergreen containers, and to come up with solutions to monitor and log their events. Occasionally, it has been Shadow IT that has embraced the technology, but it does not always apply the same standards and rigour to security around the implementation as other, more traditional IT teams. Sometimes there is simply a lack of the resources required to fix all the security holes, or the scale of the problem is so large that companies do not address it until a regulator imposes a mandate or a breach occurs, leaving these teams reacting to problems. Whatever the reason, implementing secure and compliant systems can often be an afterthought and seen as a drag on the velocity of the organisation.

Rather than being an afterthought or a bolt-on, security needs to be embedded in every single step of the build, test and release process. To do that you need to plan for it and sell it internally to your organisation to get the funding and the resources to implement it. The best way to do this is to build a business case around the costs involved in reacting to and resolving breaches. These costs can mount up very quickly. Here are some metrics that can be used.

Recovery costs - These include costs incurred during problem identification, analysis and resolution, and validation testing, as well as external support costs, regulatory fines and data recovery costs

Productivity costs - These are calculated as duration of outage × total persons affected × average percentage of productivity lost × average employee costs

Lost revenue - This is calculated as duration of outage × percentage of unrecoverable business × average revenue per hour

There are other hidden costs that can be factored into the equation, such as reputational damage and brain drain damage, when the IT staff don’t want to be associated with a high profile breach and leave the company after the event.
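To make the productivity and lost-revenue formulas above concrete, here is a minimal Python sketch. The class, field names and figures are illustrative assumptions rather than data from any real incident, and percentages are expressed as fractions between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    """Inputs for the cost formulas; all names here are illustrative."""
    duration_hours: float
    people_affected: int
    productivity_lost: float        # fraction of productivity lost, 0.0 to 1.0
    employee_cost_per_hour: float   # average cost per person per hour
    unrecoverable_business: float   # fraction of business that never comes back
    revenue_per_hour: float         # average revenue per hour


def productivity_cost(o: Outage) -> float:
    # duration x persons affected x productivity lost x average employee cost
    return (o.duration_hours * o.people_affected
            * o.productivity_lost * o.employee_cost_per_hour)


def lost_revenue(o: Outage) -> float:
    # duration x unrecoverable business x average revenue per hour
    return o.duration_hours * o.unrecoverable_business * o.revenue_per_hour


def breach_cost(o: Outage, recovery_costs: float) -> float:
    # Recovery costs (fines, external support, data recovery) do not follow
    # a formula, so they are totalled separately and passed in.
    return recovery_costs + productivity_cost(o) + lost_revenue(o)
```

For a hypothetical 8-hour outage affecting 200 people at 50% lost productivity and £40 per person-hour, `productivity_cost` gives 8 × 200 × 0.5 × 40 = £32,000. Hidden costs such as reputational damage are deliberately left out of this sketch because they are much harder to quantify.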

Modern IT needs to operate at scale, managing a dynamic infrastructure model. This must all be done without compromising on security or compliance. If that weren’t enough, it needs to continually improve, which means that data needs to be collected and measured to assess performance and give feedback. Software can now be leveraged to define everything, including networking, storage, data centres and security. Applications, as well as infrastructure, can now be defined in software and managed as software.

DevOps and security are not mutually exclusive: using DevOps practices means that security can become embedded in the process.

When we start to automate IT at scale, we need a solid foundation of infrastructure on which to host our applications. By that, I mean we need reproducible, consistent and hardened infrastructure, moving away from ‘snowflake’ servers towards a more standardised approach. The most practical and efficient way to achieve this is by managing your infrastructure with configuration management tooling.

When you start to use configuration management, you define the desired state of the server rather than the individual commands you need to run. This is all defined in code. Configuration management tools such as Chef, Puppet and Ansible allow you to express the state in code, and they then manage the state of the server without manual intervention. The code is packaged into reusable services or patterns that get applied to the servers, giving you a high level of consistency in the state of each server. This code is responsible for applying the latest patches to packages and ensuring your organisation’s security settings are applied to all servers, a practice also known as OS hardening.
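The idea of declaring state rather than running commands can be illustrated with a toy model in Python. This is only a sketch of the concept, not how Chef, Puppet or Ansible are implemented; the setting names and values are hypothetical.

```python
from typing import Dict, Tuple

State = Dict[str, str]


def plan(desired: State, actual: State) -> State:
    """Return only the settings whose actual value differs from the desired one."""
    return {key: value for key, value in desired.items()
            if actual.get(key) != value}


def converge(desired: State, actual: State) -> Tuple[State, State]:
    """Apply the plan and return (new_state, changes_made).

    Settings that are not mentioned in `desired` are left alone.
    """
    changes = plan(desired, actual)
    return {**actual, **changes}, changes


# Hypothetical hardening settings, for illustration only.
DESIRED = {"ssh_root_login": "disabled", "firewall": "enabled"}

server = {"ssh_root_login": "enabled", "hostname": "web-01"}
server, changes = converge(DESIRED, server)  # first run corrects both settings
server, changes = converge(DESIRED, server)  # second run finds nothing to change
```

Because the code describes an end state, running it repeatedly is safe: a compliant server produces an empty set of changes, while a setting that has been altered by hand is put back on the next run.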

The Center for Internet Security (CIS) provides very comprehensive guides on how to secure base operating systems and publishes a list of benchmarks that you can assess your infrastructure against. The configuration management code is treated like any other application codebase and is checked into source control. The release of that code can be managed in a controlled pipeline into development, test and production environments. It is imperative that all the runtime components in your infrastructure have their security settings managed and enforced. One of the biggest data breaches involving MongoDB happened because simple authentication was not switched on and configured, which exposed terabytes of data to the internet. These are simple steps to implement, but they are often ignored.
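Assessing a server against a benchmark boils down to running a list of named checks against facts gathered from that server. The Python sketch below shows the shape of such an assessment. The check IDs, fact names and rules are hypothetical and are not real CIS benchmark controls; in practice a dedicated compliance tool would do this job.

```python
from typing import Callable, Dict, List, NamedTuple

Facts = Dict[str, object]


class Check(NamedTuple):
    check_id: str                   # hypothetical ID, not a real CIS control number
    description: str
    passes: Callable[[Facts], bool]


# Illustrative rules only. A missing fact is treated as a failure.
CHECKS: List[Check] = [
    Check("db-01", "Database authentication is enabled",
          lambda facts: facts.get("db_auth_enabled") is True),
    Check("db-02", "Database does not listen on all interfaces",
          lambda facts: facts.get("db_bind_ip", "0.0.0.0") != "0.0.0.0"),
    Check("os-01", "No security patches are pending",
          lambda facts: facts.get("pending_security_patches") == 0),
]


def failed_checks(facts: Facts, checks: List[Check] = CHECKS) -> List[str]:
    """Return the IDs of every check the server fails, in benchmark order."""
    return [check.check_id for check in checks if not check.passes(facts)]


def may_promote(facts: Facts) -> bool:
    """A server may move to the next environment only if no check fails."""
    return not failed_checks(facts)
```

A database with authentication switched off and bound to every interface would fail `db-01` and `db-02` here, which is exactly the kind of misconfiguration that should stop a release before it reaches production.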

One of the patterns that has emerged from DevOps is immutable infrastructure. Immutable infrastructure is an approach to managing services and software deployments on IT resources wherein components are replaced rather than changed. An application or service is effectively redeployed each time any change occurs. It is often easier to replace a broken server with a new one than to try to fix it in place. The infrastructure and its networking components can be defined in code using tooling such as Packer, Terraform, CloudFormation for AWS or ARM templates for Azure. These infrastructure provisioning tools allow you to define, in detail, the networking components that the infrastructure runs in as well as the RBAC controls given to these resources. AWS and Azure have very detailed guides on networking best practices, which you can apply in this codebase.

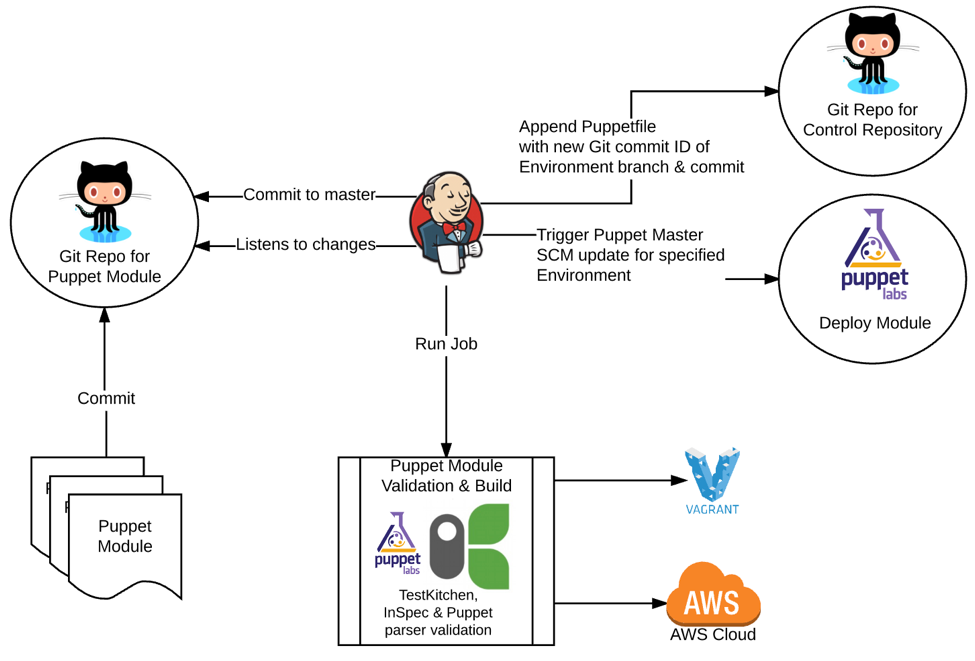

These best practices and tooling, used in conjunction with Vault (to manage secrets, keys, passwords and certificates), can provide a very flexible and secure provisioning process. This code can be executed in the release pipeline so the infrastructure can be brought online on demand or at scheduled times. Within this pipeline you can continually validate the compliance of each node by using a tool such as InSpec, which automates the continuous testing and compliance auditing of your infrastructure. When a verification check fails, the infrastructure will not progress in the pipeline. Here is an example architecture using InSpec in the pipeline:

If you are managing Docker containers, then I recommend reading Ben Wootton’s post on our blog, Docker Security: What You Need to Know. The Dirty COW vulnerability also affects containers; read about how it can be fixed in Dirty COW - (CVE-2016-5195) - Docker Container Escape.

What we do with immutable infrastructure is divide our application infrastructure into two components: things that are data and things that are everything else. For the things that are everything else, we never make updates in place; we replace them. This type of operating model can also involve re-architecting systems into 12-factor apps, stateless services and micro-service architectures. Take a look at my previous blog, Building a Twelve Factor Application, to find out about 12-factor apps. With this type of architecture, we create a new version definition that describes the state of the thing we want and then launch it in parallel with the existing one. Once the new thing is in place, we go ahead and destroy the old infrastructure. There are different deployment strategies that work well with immutable infrastructure: Blue/Green, Canary and Phoenix. When you start down this path, you end up with a tool chain that is pretty flexible and resilient. It forces you to respond to failure and build resilience into the applications, infrastructure and architectures you implement.
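The launch-in-parallel, switch, then destroy sequence described above is essentially a Blue/Green deployment. The following in-memory Python sketch models that flow. The `Stack` and `Environment` classes and the health-check callback are hypothetical stand-ins for real provisioning and load-balancer calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Stack:
    """An immutable unit of infrastructure built from one version of the code."""
    version: str


class Environment:
    def __init__(self) -> None:
        self.live: Optional[Stack] = None   # stack currently receiving traffic
        self.running: List[Stack] = []      # every stack that currently exists

    def deploy(self, version: str, is_healthy: Callable[[Stack], bool]) -> bool:
        candidate = Stack(version)
        self.running.append(candidate)      # launch alongside the live stack
        if not is_healthy(candidate):
            self.running.remove(candidate)  # discard it; the live stack is untouched
            return False
        previous, self.live = self.live, candidate  # switch traffic to the new stack
        if previous is not None:
            self.running.remove(previous)   # destroy the old stack, never patch it
        return True
```

The useful property is that a failed deployment leaves the live stack exactly as it was, so rolling back is a non-event. Note that only the stateless "everything else" is modelled here; data would live outside the stacks that get replaced.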

When you start working in this manner, you are able to begin building a culture in which every change has to exist in source control. If you make a change outside the bounds of the infrastructure codebase that you’ve defined, your configuration management framework is going to undo that change. If the configuration management framework doesn’t undo that change, that server is probably not going to live for long anyway, so any changes you’ve made by hand are going to go away.

So, we get to a place where out-of-band changes are going to be lost. It means that a new type of culture develops. Whether you are Dev or Ops, suddenly you’re operating in a model where there is a big, giant compliance engine ensuring that we have an auditable trail of actions and that everything is captured. We get to a point where we’re suddenly working in ways that InfoSec has wanted us to work for years. It is no longer an afterthought to make sure that you are in compliance; it is the default way of working with your infrastructure.

This is great: we can now guarantee the state of our infrastructure and networks, but what about our applications and data? DevOps gives companies the opportunity to improve application security; however, they often fail to realise that potential. Application security is often not enforced due to a lack of knowledge of application security practices and a lack of basic hygiene in the software delivery lifecycle. Too often, basic application security testing is not baked into the release pipeline. Building it in early is sometimes referred to as Shifting Security Left; Shannon Lietz from Intuit has a great post explaining this concept.

There are many tools that expose security vulnerabilities through static analysis, such as Snyk, FindBugs and SonarQube. The Open Web Application Security Project (OWASP) is a great resource for finding out about the types of tools that you can use on your applications. There are also tools on the market that allow your developers to find security and operational issues in application code at development time rather than in a later deployment phase. Tools from vendors such as New Relic allow developers to spot issues early and remediate them. For developers to be invested, they need to have the right tools at their disposal and receive the relevant training on how to code secure applications.

With the increased adoption of ransomware, we now have to get smarter and more efficient at encrypting and backing up our data, so that if we are compromised we can tear that infrastructure down and rebuild a new stack from configuration management code plus the most recently backed-up data. There are some really cool practices developing in the serverless space, where functions are being created to back up and restore data on a real-time basis using Lambda and DynamoDB. Keep an eye out for developments in this space.

As I have discussed, there are a lot of vectors to address when it comes to security and DevOps practices. We have the opportunity to add more consistency and security to our IT estate using DevOps and automation; however, it is up to organisations to invest in the time and education of their IT staff so that they are able to implement these practices. Further resources for security and DevOps can be found at awesome-devsecops.